How did I create a load balancer in Nginx?

The definitive guide to creating a load balancer in Nginx. This was part of my college project, so I thought I would share it with you.

First, Let's Understand: What Is a Load Balancer?

Load balancing is the process of efficiently distributing network traffic across multiple servers, also known as a server farm or server pool. By distributing the load evenly, load balancing improves responsiveness and increases the availability of applications. It has become a necessity as applications become more complex, user demand grows, and traffic volume increases. Load balancing is the most straightforward method of scaling out an application server infrastructure. As application demand increases, new servers can be easily added to the resource pool, and the load balancer will begin sending traffic to the new servers.

In layman's terms: a load balancer distributes incoming network requests among several servers, so that no single server has to carry the whole load.

A load balancer is a device or software that distributes network or application traffic across a cluster of servers. By balancing requests across multiple servers, a load balancer reduces individual server load and prevents any one application server from becoming a single point of failure. (Note: a single load balancer in front of the servers is itself a single point of failure; we will come back to how to avoid this.)

Why do we need a Load Balancer?

Load balancing is a core system design concept. System design builds on computer science fundamentals such as networking, distributed systems, and parallel computing, and many companies, including Google, Netflix, Microsoft, and Bloomberg, use load balancing.

Imagine you have an app with millions of users sending requests to your services. On the server side, we need solid engineering to handle those network requests: a request should not fail even if there is a hardware failure. Instead, it should be redirected to an active server that can accept it and send a response right away. This is where the load balancer comes into the picture.

What are the core features of a Load Balancer?

If a single server goes down, the load balancer removes that server from the server group and redirects traffic to the remaining online servers.

When a new server is added to the server group, the load balancer automatically starts to send requests to it.

Distributes client requests or network load efficiently across multiple servers.

Ensures high availability and reliability by sending requests only to the online servers.

Provides the flexibility to add or subtract servers as demand dictates.

Can be added at multiple layers (application servers, databases, caches, etc.) in the application stack.

Can dynamically add or remove servers from a group without interrupting existing connections.
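The first feature in this list (taking a failed server out of rotation) can be sketched in open-source Nginx with passive health checks. This is only a minimal sketch, not the configuration used later in this post: the upstream name `backend_pool`, the private addresses, and the thresholds are illustrative assumptions. Also note that in open-source Nginx, adding or removing a `server` line still needs a configuration reload.

```nginx
# Goes inside the http { } block of nginx.conf.
# Names, addresses, and thresholds below are hypothetical examples.
upstream backend_pool {
    # After 3 failed attempts within 30s, skip this server for 30s.
    server 10.0.0.11 max_fails=3 fail_timeout=30s;
    server 10.0.0.12 max_fails=3 fail_timeout=30s;

    # Only receives traffic when the servers above are unavailable.
    server 10.0.0.13 backup;
}
```

With this in place, Nginx stops sending requests to a server that keeps failing and retries it after the timeout, which matches the "only online servers" behaviour described above.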

Some Famous Load Balancing Algorithms

Different load balancing algorithms provide different benefits; the choice of load balancing method depends on your needs:

Round Robin - Requests are distributed across the group of servers sequentially.

Least Connections - A new request is sent to the server with the fewest current connections to clients. The relative computing capacity of each server is factored into determining which one has the least connections.

Least Time – Sends requests to the server selected by a formula that combines the fastest response time and fewest active connections. Exclusive to NGINX Plus.

Hash – Distributes requests based on a key you define, such as the client IP address or the request URL. NGINX Plus can optionally apply a consistent hash to minimize redistribution of loads if the set of upstream servers changes.

IP Hash - The IP address of the client is used to determine which server receives the request.

Random with Two Choices – Picks two servers at random and sends the request to the one that is selected by then applying the Least Connections algorithm (or for NGINX Plus the Least Time algorithm, if so configured).
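As a rough illustration, this is how several of the methods above might be selected in an open-source Nginx `upstream` block. The pool names and addresses are assumptions made up for the example; Least Time is left out because it is exclusive to NGINX Plus.

```nginx
# Each upstream block goes inside http { }. Use one method per upstream.
# Pool names and addresses are hypothetical.

# Round Robin is the default, so no directive is needed.
upstream rr_pool {
    server 10.0.0.11;
    server 10.0.0.12;
}

# Least Connections
upstream least_conn_pool {
    least_conn;
    server 10.0.0.11;
    server 10.0.0.12;
}

# IP Hash: requests from the same client IP go to the same server.
upstream ip_hash_pool {
    ip_hash;
    server 10.0.0.11;
    server 10.0.0.12;
}

# Hash on a key you define, here the request URI.
upstream hash_pool {
    hash $request_uri;
    server 10.0.0.11;
    server 10.0.0.12;
}

# Random with Two Choices, then Least Connections between the two.
upstream random_pool {
    random two least_conn;
    server 10.0.0.11;
    server 10.0.0.12;
}
```

A `location` block would then point at whichever pool you chose, for example `proxy_pass http://least_conn_pool;`.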

What are the benefits of this?

- Reduce Downtime

- Scalable

- Redundancy

- Flexibility

- Efficiency

- Global Server Load Balancing

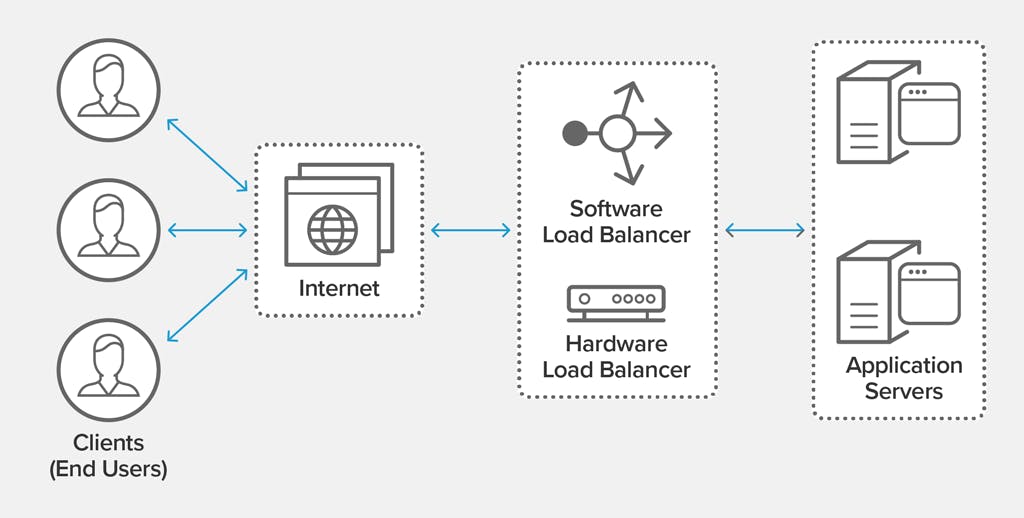

Hardware-level vs Software-Level Load Balancing

Load balancers typically come in two flavors: hardware-based and software-based.

Vendors of hardware-based solutions load proprietary software onto the machine they provide, which often uses specialized processors. To cope with increasing traffic to your website, you have to buy more or bigger machines from the vendor.

Software solutions generally run on commodity hardware, making them less expensive and more flexible. You can install the software on the hardware of your choice or in cloud environments like AWS EC2.

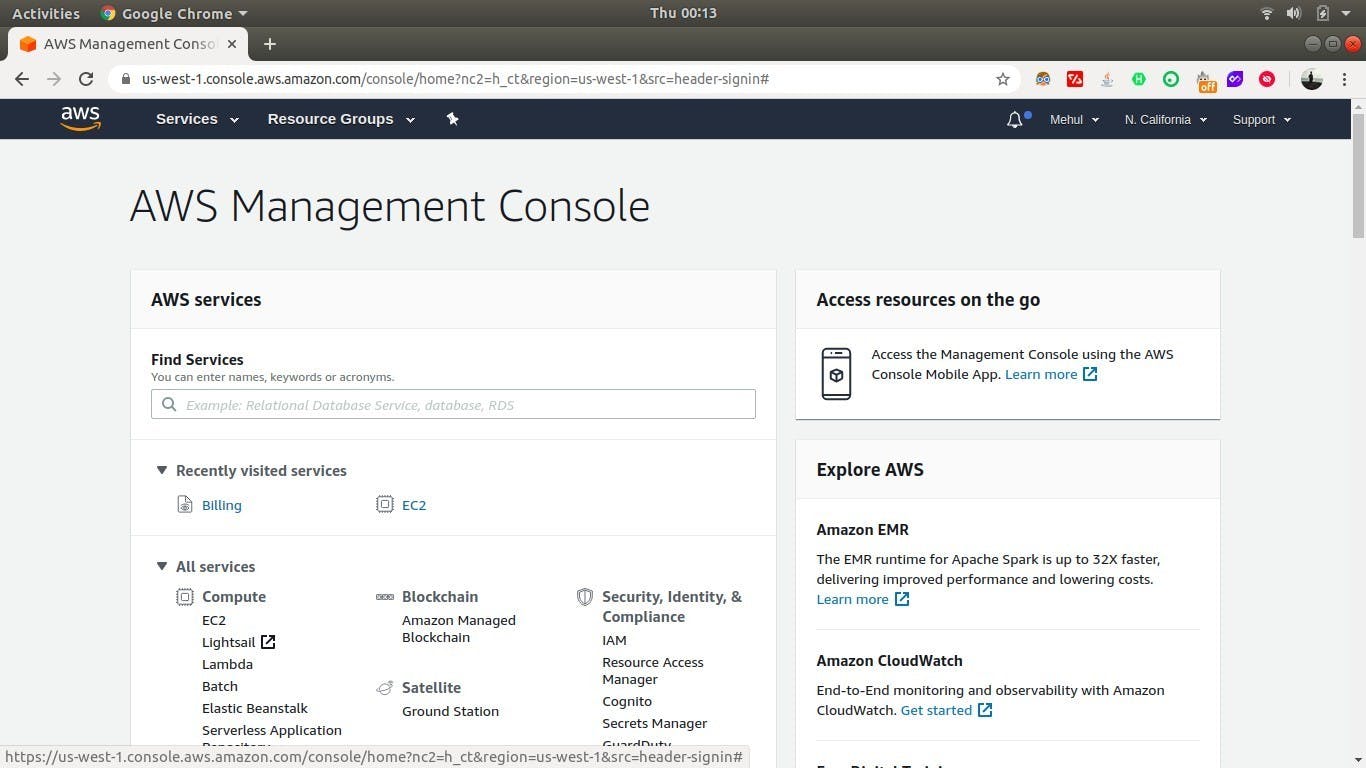

Before we start, we will need an AWS account, and we will be installing Nginx on every server.

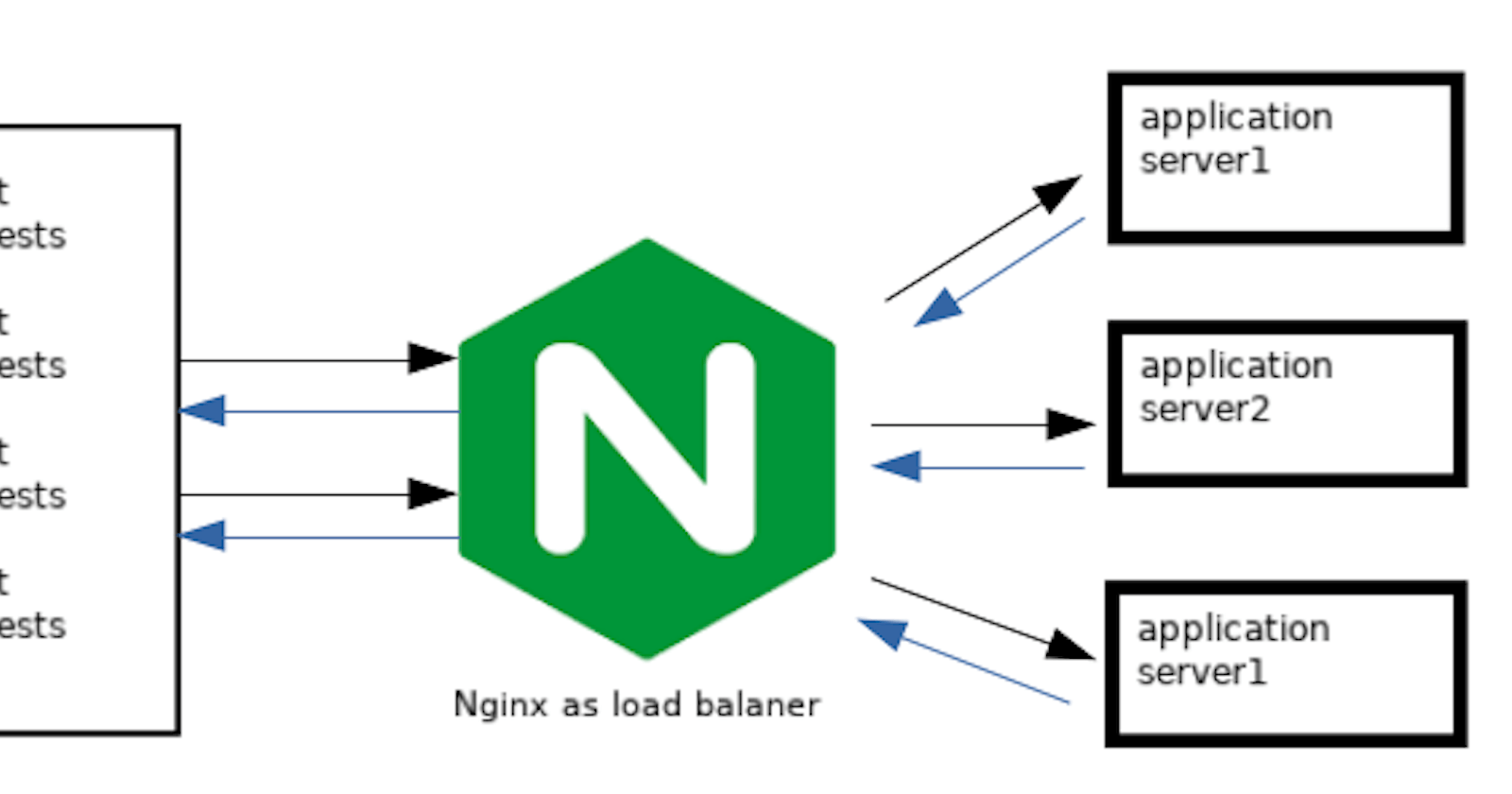

Overview of how the project is going to work

To create the load balancer in AWS we will need two or more servers and one load balancer (you can also run two load balancers, because a single load balancer is itself a single point of failure). For example, if you have two servers, both of them sit behind the load balancer, and we can add more servers as we need them. Handling more traffic by adding more machines, rather than by making one machine bigger, is known as horizontal scaling in system design. The load balancing algorithm takes the available servers into account before routing each request to an appropriate machine.

To begin, we will create three servers on AWS: two will serve files, and one will act as the load balancer that routes requests between the other two. The point of building this is that the load balancer spreads a large number of network calls across different servers, so no single server carries too much load. It also means that no single backend server is a point of failure: suppose there are servers A and B behind the load balancer (which is itself just a server). If A goes down, requests that would have gone to A are routed to B instead.

Creating Servers and Load Balancer

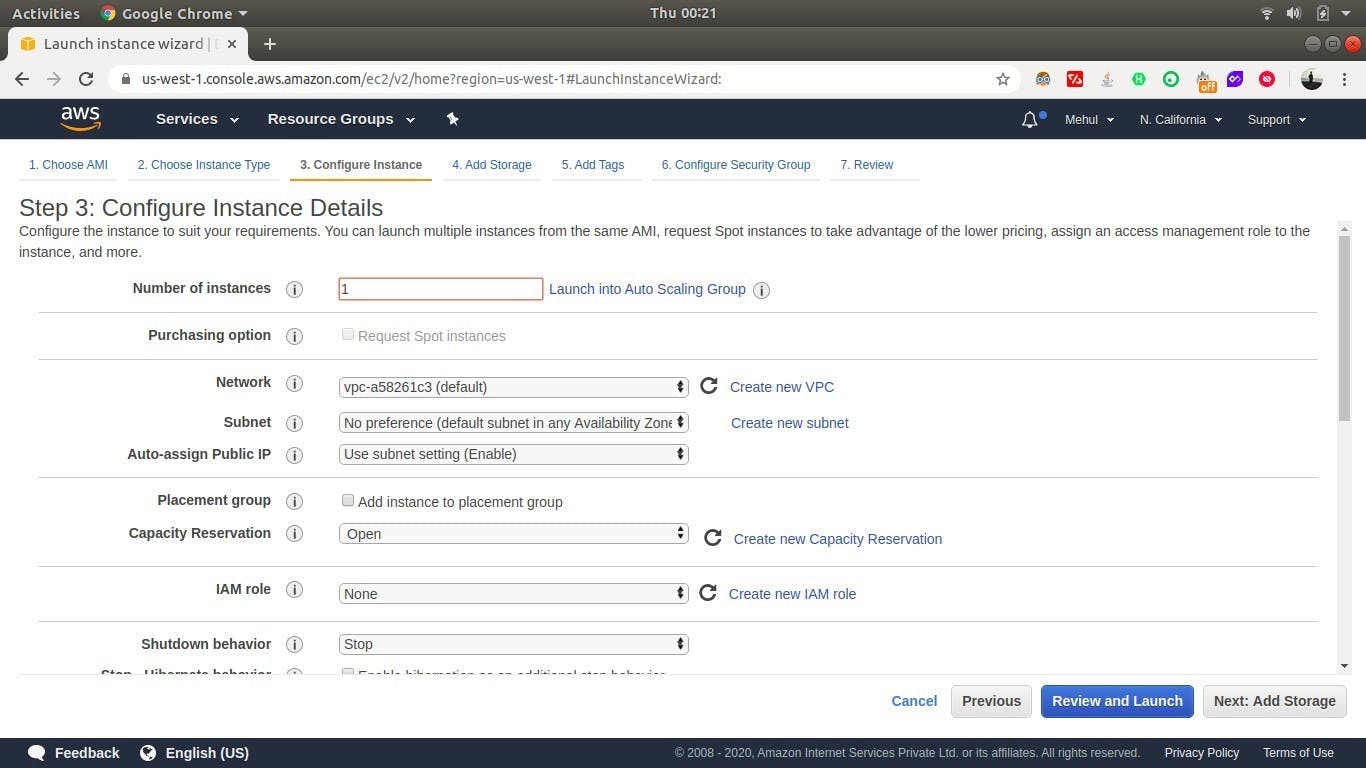

- Log in to your AWS account, choose a region, and open the EC2 service.

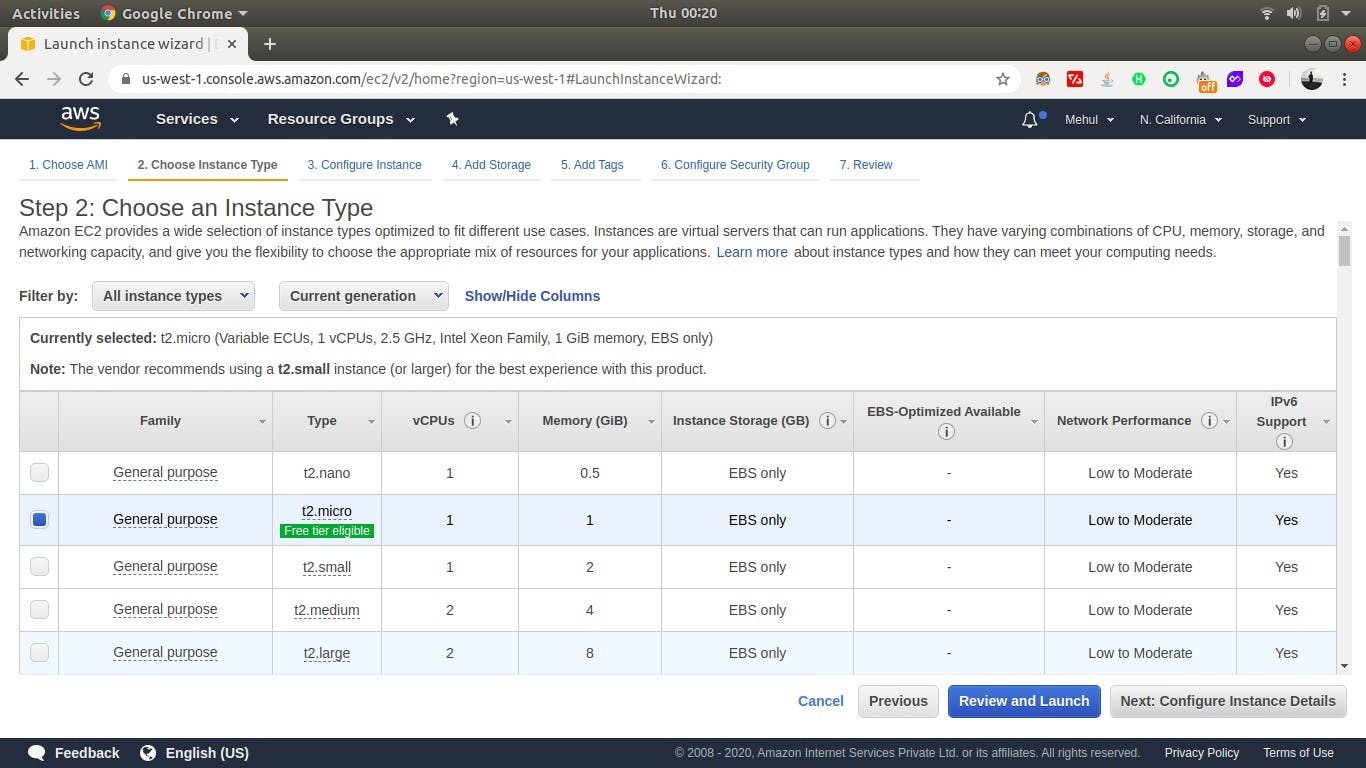

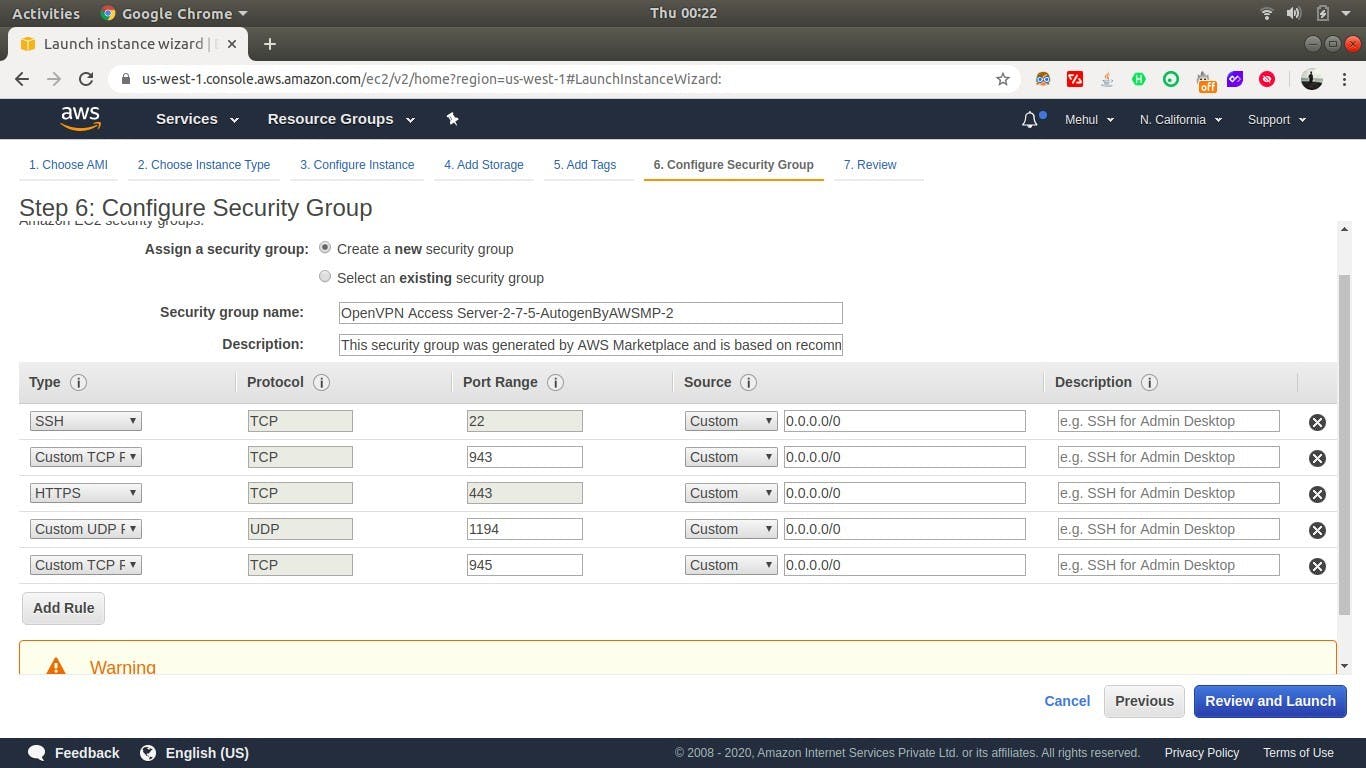

- After opening EC2, click on create EC2 instance and fill in the configuration. Don't forget to add network rules so that you can reach your application over the protocols you need, such as SSH, HTTP, HTTPS, TCP, or UDP.

- Here we can create N servers at once using the number of instances field, so we don't have to repeat the process for each server. (Just enter 3 to create 3 servers.)

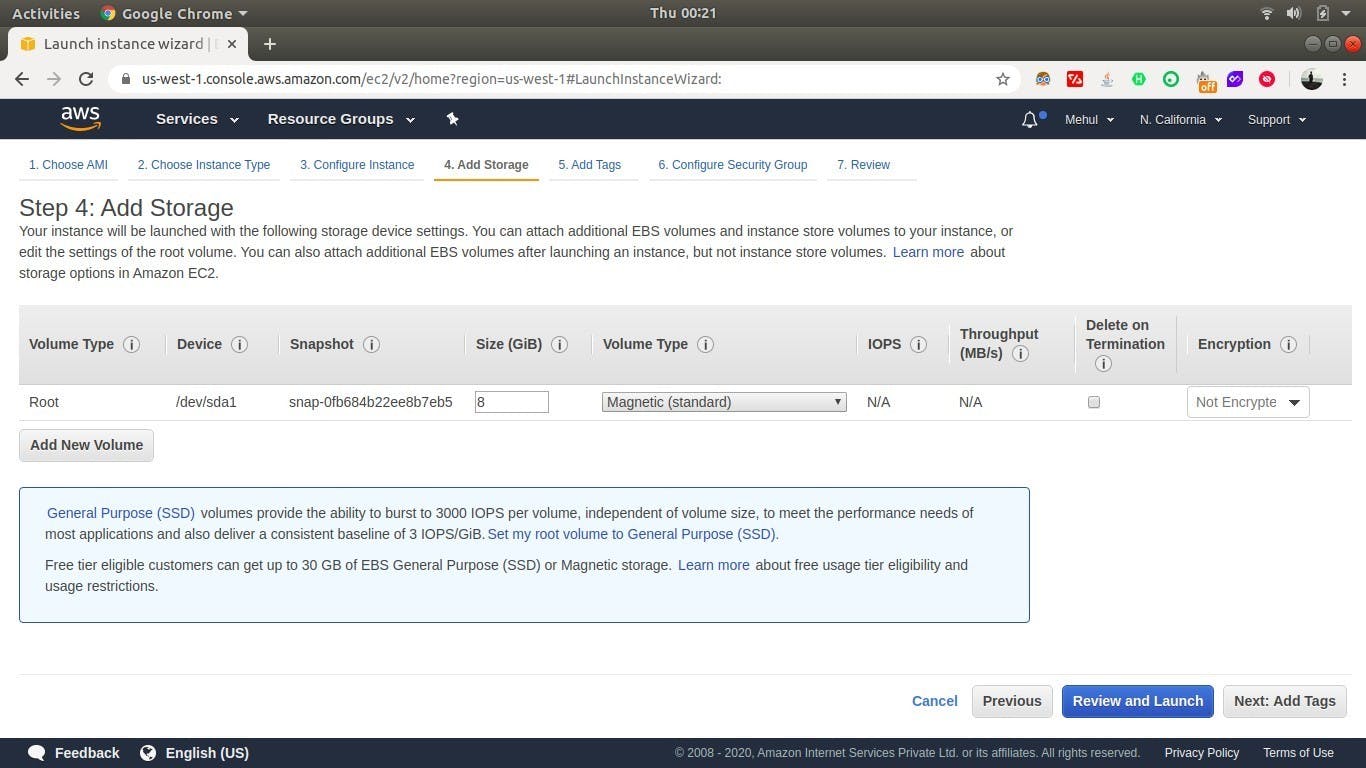

- Note: if you want to keep the server's disk even after the instance is terminated, make sure to uncheck “delete on termination” for the volume. Packages and files on an EBS volume survive a stop or restart either way; this setting only controls whether the volume is deleted when the instance itself is terminated.

- Let's finish up by adding the network rules you need.

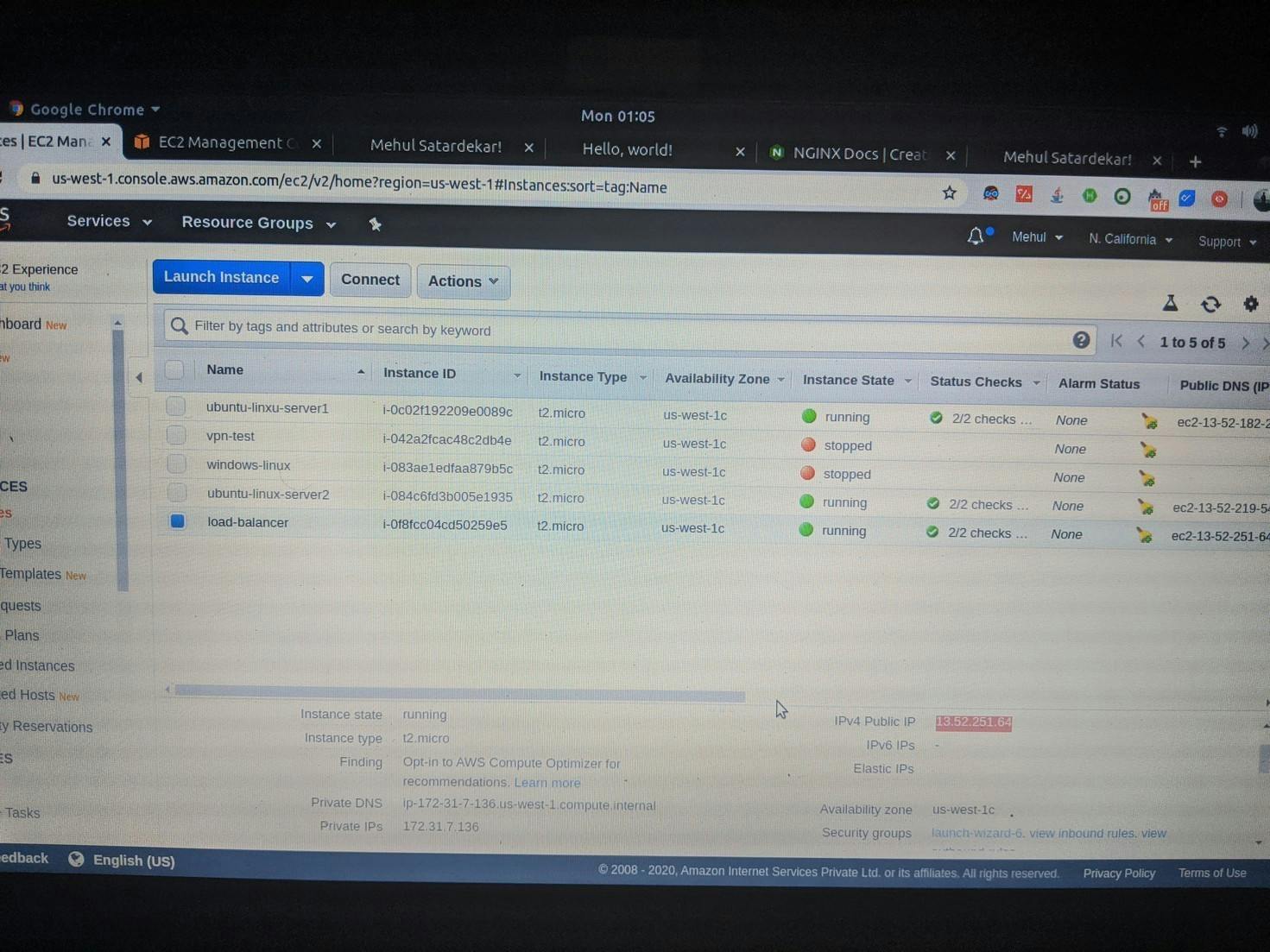

- Here you will see the EC2 instances running.

Time To SSH into all Servers

Install nginx-extras (the standard open-source nginx package is fine too) on all servers using

sudo apt-get install nginx-extras

You can check the Nginx version by typing

nginx -v

Let's start the Nginx server

sudo systemctl start nginx

To check whether Nginx is running

sudo systemctl status nginx

Configure NGINX to serve your website

cd into /etc/nginx/. This is where the NGINX configuration files are located. The two directories we are interested in are sites-available and sites-enabled.

sites-available contains individual configuration files for all of your possible static websites.

sites-enabled contains links to the configuration files that NGINX will actually read and run.

What we’re going to do is create a configuration file in sites-available, and then create a symbolic link (a pointer) to that file in sites-enabled to actually tell NGINX to run it. Create a file called mehul.com in the sites-available directory and add the following text to it:

server {
    listen 80 default_server;
    listen [::]:80 default_server;
    root /var/www/mehul.com;
    index index.html;
    server_name mehul.com www.mehul.com;
    location / {
        try_files $uri $uri/ =404;
    }
}

Now that the file is created, we’ll link it into the sites-enabled folder to tell NGINX to enable it. The command is as follows:

sudo ln -s /etc/nginx/sites-available/mehul.com /etc/nginx/sites-enabled/mehul.com

Now restart Nginx to apply the changes we have made:

sudo systemctl restart nginx

Now copy the public DNS name (or public IP) of a server from the AWS console and open it in a browser. You will see the file being served by the high-performance Nginx server running on the AWS EC2 instance.

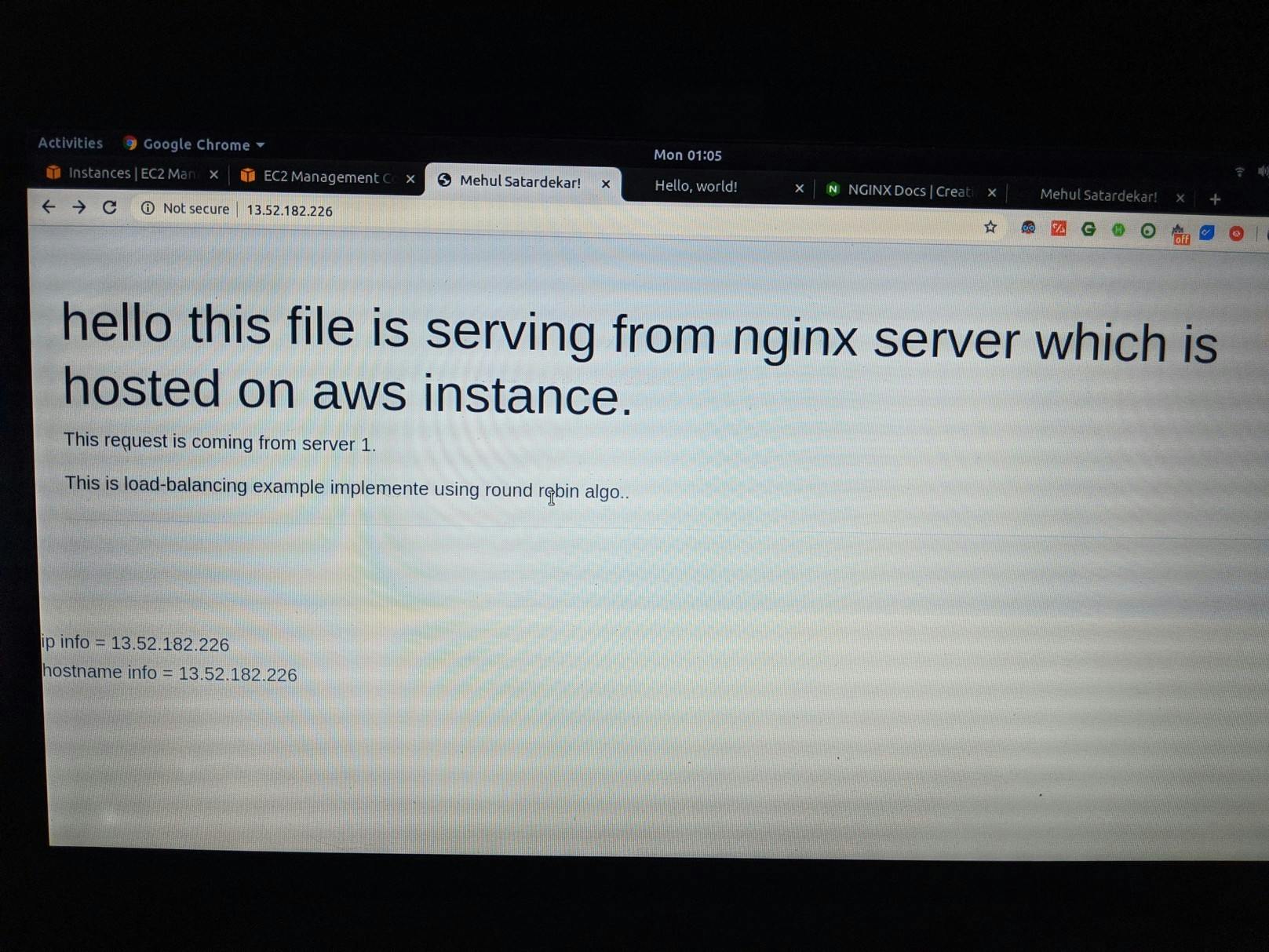

Server A (public IP of this server is 13.52.182.226)

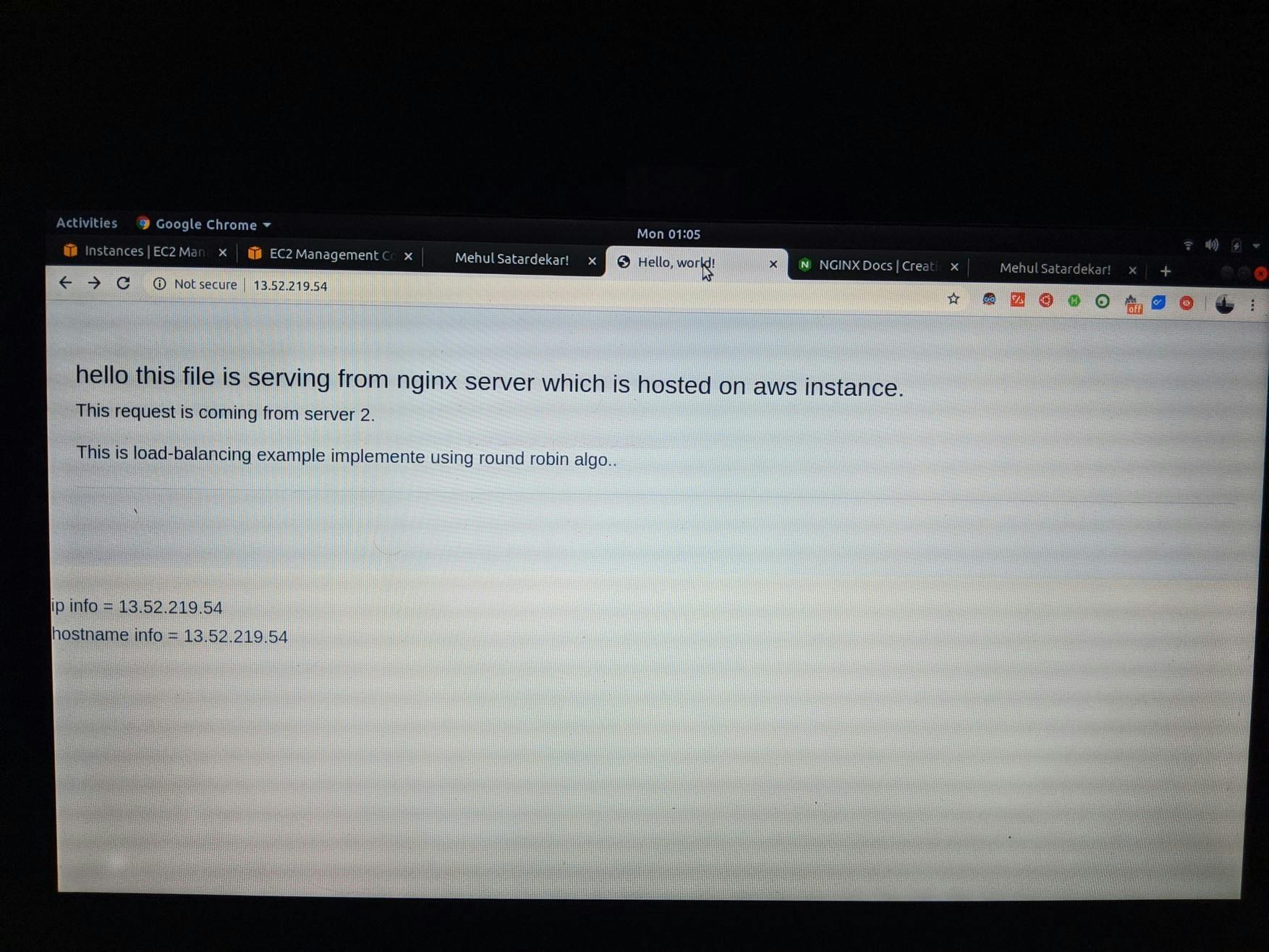

Server B (public IP of this server is 13.52.219.54)

Now configure the load balancer (Server 3)

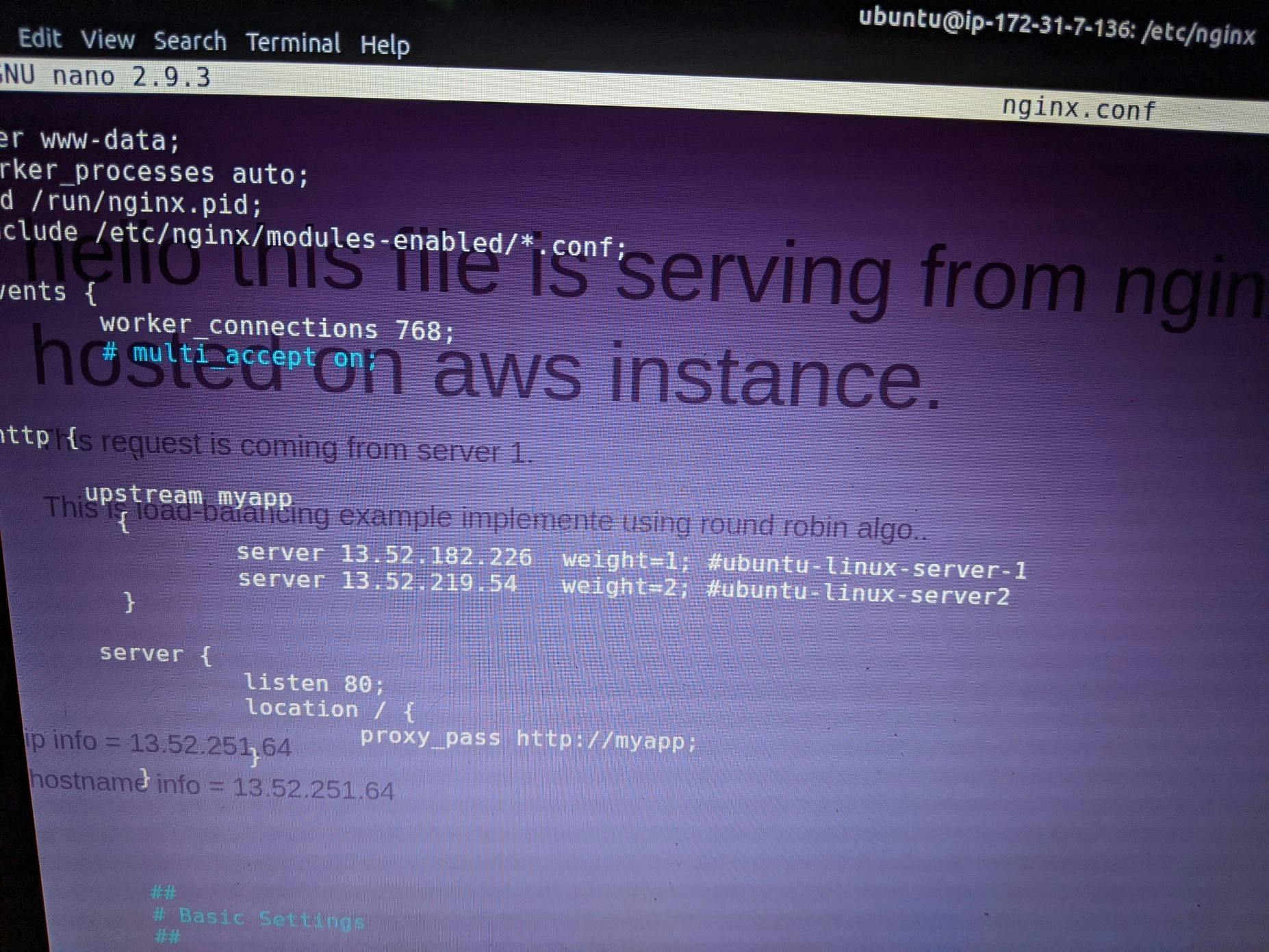

Now change into the Nginx directory with cd /etc/nginx/ and edit the nginx.conf file:

worker_processes auto;
include /etc/nginx/modules-enabled/*.conf;

events {
    worker_connections 768;
}

http {
    # The pool of backend servers (Server A and Server B).
    upstream myapp {
        server 13.52.182.226 weight=2;
        server 13.52.219.54 weight=1;
    }

    server {
        listen 80;

        location / {
            # Forward every request to the pool defined above.
            proxy_pass http://myapp;
        }
    }
}

You can see the configuration here:

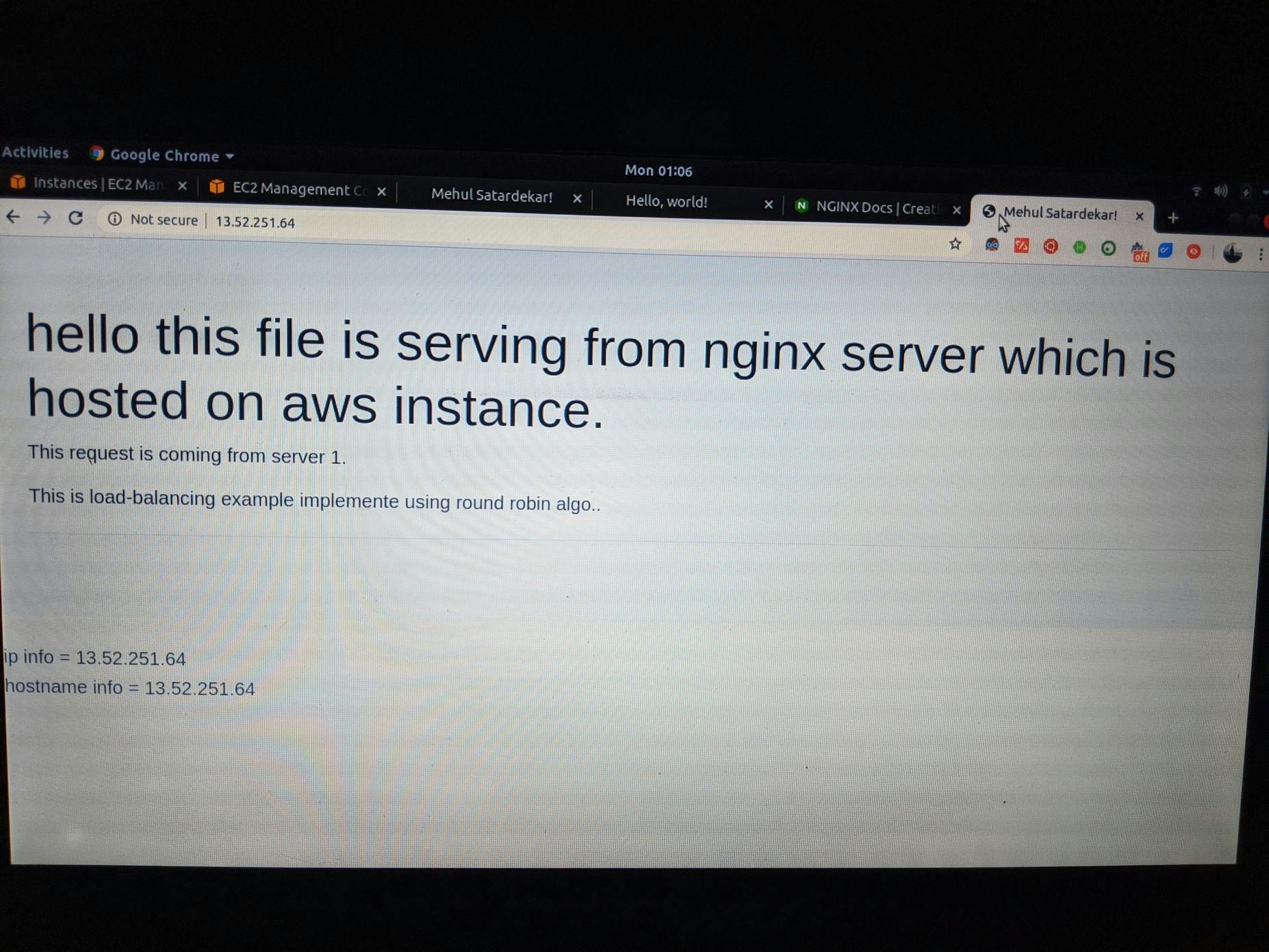

Now we have our own load balancer running on a high-performance Nginx server. Copy the public DNS name of the load balancer server and open it in a browser, and it will pass each request on as configured (we have used the round-robin algorithm, which distributes the network requests according to the weights you provide).

As you refresh the page, the load balancer moves between the servers, since we have used a round-robin algorithm. (Note: the more weight you give a particular server, the more requests are sent to it. With weights 2 and 1, Server A receives about two of every three requests.)

Things to be noted while you deploy your own load balancer

Round robin is not always the best choice for load balancing. If a server is hitting 550k requests or more, other algorithms such as Least Connections or IP Hash can handle the traffic more efficiently.

While deploying an app on Nginx, keep security in mind. Nginx ships with good security, arguably better than Apache or IIS, but if you are not using certain Nginx modules, it is better to remove them.

Switch to TLS instead of the older SSL protocols.
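A minimal sketch of restricting a server block to modern TLS versions is shown below. The `server_name` and certificate paths are placeholders, not values from this project; `myapp` is the upstream defined earlier in this post. TLSv1.3 also needs a reasonably recent Nginx and OpenSSL build, so treat the version list as an assumption about your setup.

```nginx
# Goes inside http { }. server_name and certificate paths are placeholders.
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/nginx/ssl/example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.key;

    # Allow only TLS 1.2 and 1.3; older SSL/TLS versions are not offered.
    ssl_protocols TLSv1.2 TLSv1.3;

    location / {
        proxy_pass http://myapp;
    }
}
```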

The “server_tokens” directive controls whether the Nginx version number (and, on some builds, the operating system) is shown on error pages and in the “Server” HTTP response header field. It is on by default, so set it to off (in the stock nginx.conf this usually means removing the “#” in front of the server_tokens off line); otherwise attackers get to know which OS and server version you are running.
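As a small sketch, hiding the version string means having the directive active and set to `off` (Nginx's default is `on`). Everything else in the `http` block is omitted here.

```nginx
http {
    # Hide the Nginx version on error pages and in the "Server" header.
    server_tokens off;

    # ... the rest of the existing http configuration stays as it is ...
}
```

After a reload, the response header should read `Server: nginx` without a version number.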

Coming to the Single Point Of Failure (SPOF): there are multiple ways to tackle that situation, but I am saving that for another blog. In that blog I will cover how to avoid this problem, with an example 😉